Automation Academy: Apple Intelligence, GPT-5, and the ‘Use Model’ Action in Shortcuts

As I mentioned in my iOS and iPadOS 26 review, I find Shortcuts’ ‘Use Model’ action one of the more interesting additions to Shortcuts this year (besides, that is, the excellent Mac-only automation features) and a fascinating example of how Apple can leverage its third-party app ecosystem to sidestep the fact that it’s behind other companies in developing a large language model.

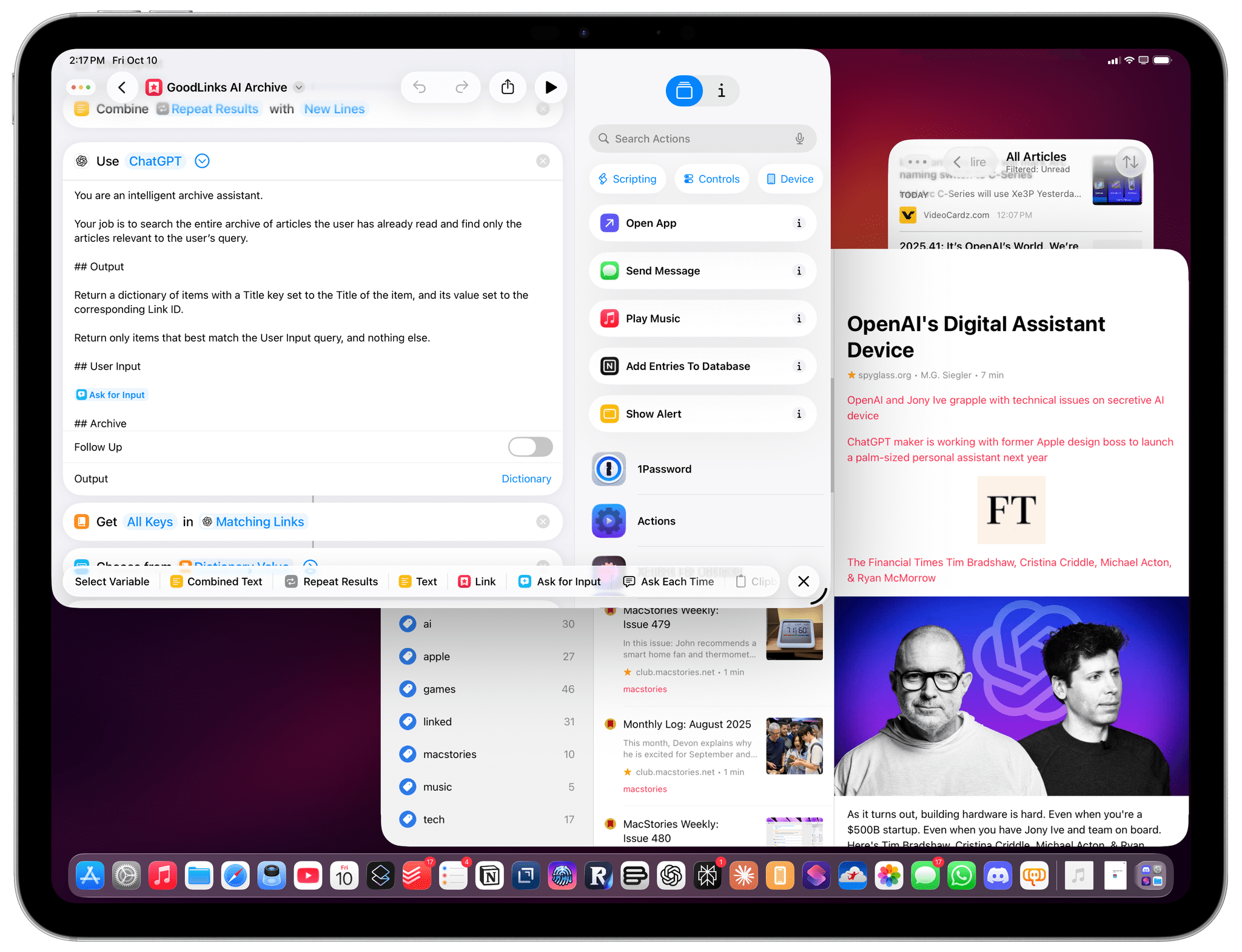

In this article, I want to explain how I’ve been using the ‘Use Model’ action to add AI features to my favorite apps that their developers never built themselves. This is the most fascinating aspect of Apple’s AI strategy this year (arguably even more so than the Foundation Models framework for on-device AI), and it shows how the company’s partnership with OpenAI can be especially fruitful when GPT-5 is put in the hands of Shortcuts power users.

But first, in case you missed it, some necessary context. ‘Use Model’ is a new action in the Shortcuts app for iOS, iPadOS, and macOS 26 that lets you ask questions of Apple’s on-device Foundation model, the larger model hosted on Private Cloud Compute, or ChatGPT, which is powered by GPT-5. What’s unique about this action isn’t that it can generate responses; it’s that it also understands native Shortcuts variables and can be configured to return data in a consistent, structured format, despite the non-deterministic nature of LLMs. Here’s how I described the action in my review:

in addition to freeform prompting and the ability to ask follow-up questions, you can pass Shortcuts variables to the action and explicitly tell the model to return a response based on a specific schema or type of variable. Effectively, Apple has built a Shortcuts GUI for what third-party developers can do with the Foundation model in Swift code, but they’re letting people work with the Private Cloud Compute model and ChatGPT, too.

And:

The true potential of the Use Model action as it relates to automation and productivity lies in the ability to mix and match data from apps (input variables) with structured data you want to get out of an LLM (output variables). This, I believe, is an example of Apple’s only advantage in this field over the competition, and it’s an aspect I would like to see them invest more in. Regardless of the model you’re using, you can tell the Use Model action to return a response with an ‘Automatic’ format or one of six custom formats:

- Text
- Number
- Date
- Boolean
- List
- Dictionary

With this kind of framework, you can imagine the kinds of things you can build. Perhaps you want to make a shortcut that looks at your upcoming calendar events and returns their dates; maybe you want one that organizes your reminders into a dictionary, using list names as keys and task titles as values; or perhaps you want to ask the model a question and consistently get back a “True” or “False” response. All of those are possible with this action and its built-in support for schemas. What I love even more, though, is the fact that custom variables from third-party apps can be schemas too.
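For comparison, here’s roughly what the reminders example might look like for a developer calling the Foundation Models framework directly in Swift, which is the capability the ‘Use Model’ action wraps in a GUI. This is a minimal sketch under a few assumptions, not how Shortcuts implements the action: it assumes an iOS or macOS 26 device with Apple Intelligence available, and the `TaskGroup` and `OrganizedReminders` types and the `merge` and `organize` helpers are hypothetical names I made up for illustration. Because I’m not certain that guided generation accepts Swift dictionaries directly, the schema is modeled as an array of list/tasks pairs and folded into a dictionary afterward.

```swift
import FoundationModels

// Hypothetical schema: one Reminders list and the task titles assigned to it.
@Generable
struct TaskGroup {
    @Guide(description: "The name of a Reminders list, such as Home or Work")
    var list: String

    @Guide(description: "Titles of the tasks that belong in this list")
    var tasks: [String]
}

// Hypothetical top-level schema the model's response must conform to.
@Generable
struct OrganizedReminders {
    var groups: [TaskGroup]
}

// Folds (list, tasks) pairs into a dictionary keyed by list name,
// appending tasks when the model repeats the same list name.
func merge(_ pairs: [(list: String, tasks: [String])]) -> [String: [String]] {
    var result: [String: [String]] = [:]
    for pair in pairs {
        result[pair.list, default: []].append(contentsOf: pair.tasks)
    }
    return result
}

// Asks the on-device model to sort task titles into lists and returns
// the result as a dictionary (list name -> task titles).
func organize(_ titles: [String]) async throws -> [String: [String]] {
    // Design choice for this sketch: return an empty dictionary when the
    // on-device model can't be used, rather than throwing.
    guard case .available = SystemLanguageModel.default.availability else {
        return [:]
    }
    let session = LanguageModelSession(
        instructions: "You sort to-do items into a small number of sensible lists."
    )
    let prompt = "Group these tasks into lists: " + titles.joined(separator: "; ")
    // Guided generation: the model's output is constrained to decode
    // into OrganizedReminders instead of returning free-form text.
    let response = try await session.respond(to: prompt, generating: OrganizedReminders.self)
    return merge(response.content.groups.map { (list: $0.list, tasks: $0.tasks) })
}
```

The point of the sketch is that the schema, not the wording of the prompt, constrains the shape of the answer; that’s the same idea the ‘Use Model’ action exposes through its output formats, minus the Swift.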

In this Automation Academy class, I’ll focus on variables from third-party apps and how I’ve built a suite of shortcuts that add new functionality to two of my favorite iPhone and iPad apps: GoodLinks and Lire. I believe the integration between LLMs, Shortcuts, and App Intents is a new frontier for hybrid automation; I hope this story will inspire you to come up with your own ideas for brand-new features that don’t yet exist in the apps you use every day.

Let’s dive in.